To support distributed analysis of vertical partitioned data a few additional features are currently in development. In de case of horizontal partitioned data the same algorithm is executed at all sites reporting all the same parameters. These results are considered to be privacy preserving. In the case of vertical partitioned data this is no longer the case, and intermediate results (especially when combined) could cause privacy issues.

In this post we use Secure Sum (one of the basic building blocks of secure multi-party computation) as an example.

The Problem

Secure Sum Algorithm

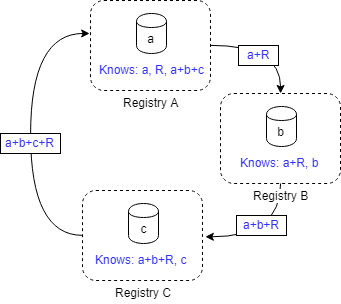

Let us assume that there are 3 registries (A, B and C having respectively a, b and c patients) which want to know the total number of patients of all registries (N=a+b+c) but do not want to share their number of patients with the other registries. However it is accepted that the other registries learn the average of the other two registries. An algorithm that solves this problem is:

- A generates a random number R

- A add this number to its patient count x1=a+R

- A shares x1 with registry B

- B adds its patient count x2=x1+b

- B shares x2 with registry C

- C adds its patient count x3=x2+c

- C shares x3 with registry A

- A subtracts the random number N=x3–R

- Optionally A shares the result N with B and C

Missing Infrastructure Features

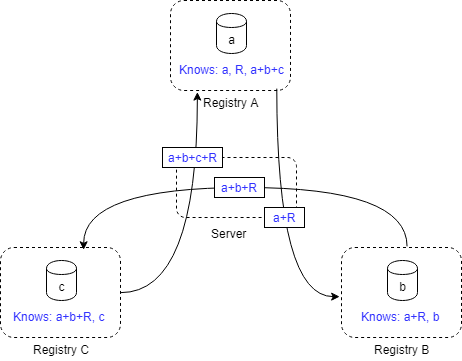

This algorithm works because no intermediate results are shared with anyone else than the intended receiver. All communication between nodes is handled by the server, thus the server can read the messages (x1, x2, x1 and N in this case) and thereby learning the number of patients of the individual registries, something we are trying avoid. If the messages are end-to-end encrypted, the server can no longer interpret them, but the intended receiver can.

Another missing feature is temporary storage, as registry A needs to remember the Random number it used to create intermediate result x1.

End-to-end Encryption

All messages between organizations need to be encrypted. Thus all nodes and users from a single organization use the same private-key to decrypt messages. Every organization publishes its public key at the server, so that other parties can send encrypted messages to them.

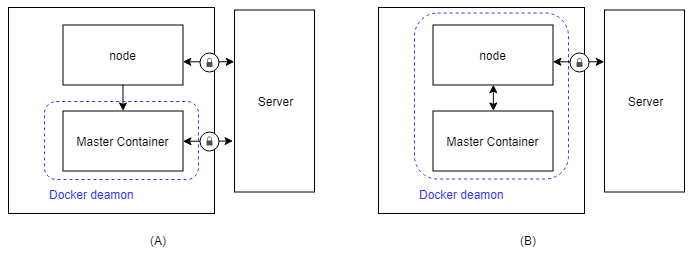

The current implementation of the Master Communication poses a issue. The Master container has an active internet connection (which was also raising questions), as it has to post results to the server and retrieve the results for the central part of the algorithm. The issue is that the Master container would require access to the private key of the organization to decrypt the results. This is especially troublesome as the master containers are could be developed by anyone and should therefore not be trusted with a private key. Preferably the encryption and decryption of messages should take place outside of the algorithm containers.

Another thing that should be taken into consideration is that the master container does not necessary run on a machine from the organization where the request came from.

(A) Current situation where the master container directly communicates with the server. (B) New situation where the container only communicates with the node instance. This way we can encrypt all outgoing messages for their receiver, and we can decrypt all messages that are intended for the master container by using the private key of the organization where the master container runs.

Temporary Storage

When a algorithm container is finished, the results are send back to the server. There is no way of sharing data between algorithm containers (not considering sending this data to the central server first, which is not always possible).

In our Secure Sum example above, the random value R needs to be stored somewhere at registry A so that it can be used in the final step. Each sequence of containers (initiated by a master container) shares the same run_id, this can be used to gain access to a docker volume specifically for this run.

Note that this temporary storage can also be used for chaining algorithms. For example, you first send a pre-processing algorithm container after which you send the actual analysis container.

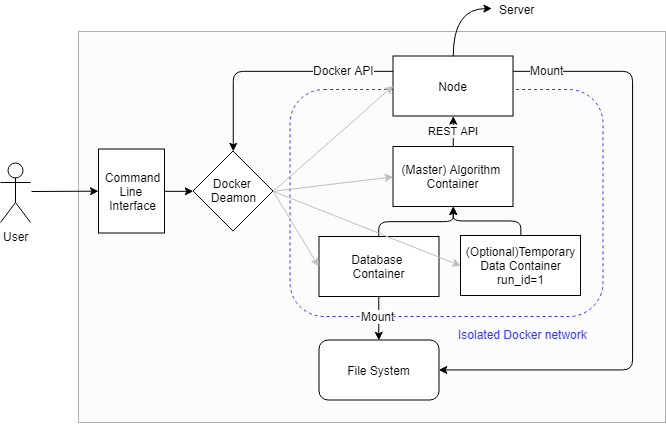

Infrastructure Design Changes

Database model Organization is update to contain a public key

Algorithm containers are isolated from the internet and can communicate only with the Vantage6-node instance that initiated them

The Vantage6-node needs an API interface which the algorithms can use to send encrypted messages to the central server. An algorithm needs to specify both the message and the receiver.

The Vantage6-node needs to be dockerized to be in the same network as the algorithm containers. The Vantage6-node instances will have access both to the private algorithm network as well as the internet.

The database at the node is stored in a docker volume. This can be replaced by a data-provider service at a later stage.

A temporary docker volume needs (optionally) be created for each run_id.

REST API at the server needs to be updated, to be able to provide nodes with the required public keys