Updating your algorithm from v4 to v5

Dec. 5, 2025

The expected release of vantage6 v5.0 will make algorithms more modular and easier to maintain. Here's how to do it.

Continue reading


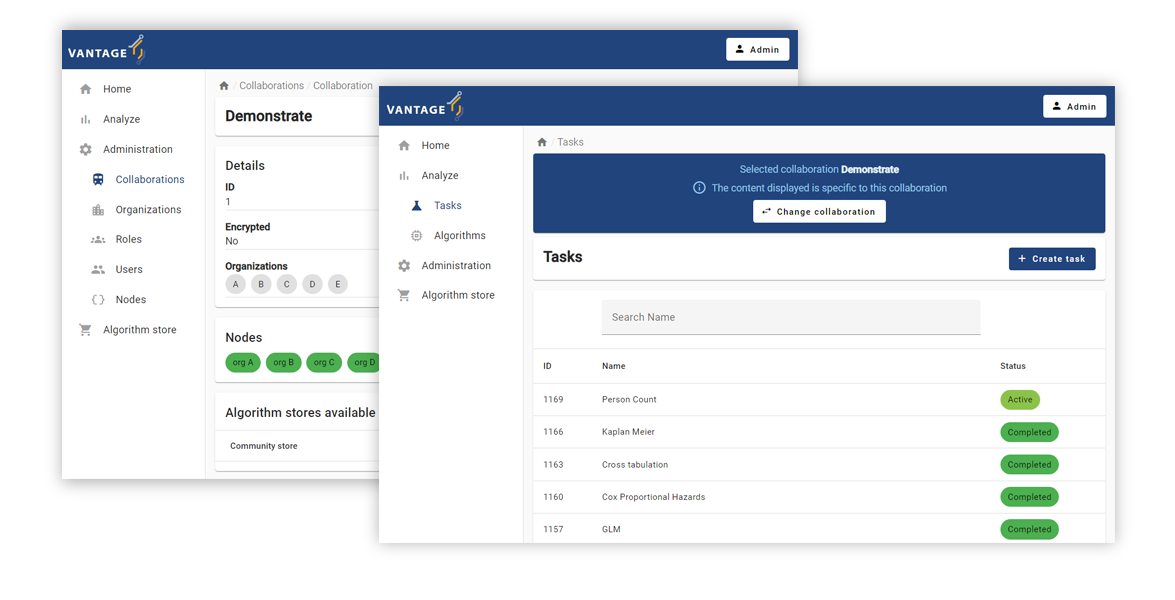

In vantage6 we've changed the task workflow for the better

The vantage6 infrastructure is tightly coupled to Docker. So why are we replacing the Docker API with the Kubernetes API?

Last year my colleagues Luisa, Ermanno and I had the privilege of joining Peter Schmidt on the Code for Thought podcast, where we discussed our work at the Netherlands eScience Center.